LocalLibrary: Local RAG System for Documents - Chat with Files

LocalLibrary is a powerful feature in Braina that brings Retrieval-Augmented Generation (RAG) to your local documents. It lets you hold intelligent conversations with your personal files while maintaining complete privacy: when you use LocalLibrary with local AI models, everything happens on your device.

LocalLibrary is an excellent tool for researchers, professionals, and students who need to work with their document collections efficiently. Whether you're analyzing reports, reviewing study materials, or looking for specific information, LocalLibrary provides a natural language interface to your personal knowledge base.

LocalLibrary lets you create collections of documents and chat with them using either local or cloud-based LLMs. It creates embeddings of your documents, enabling semantic search and responses grounded in your document content.
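To make "semantic search" more concrete, here is a minimal Python sketch of the general technique, not Braina's implementation: each snippet is represented by an embedding vector, and the snippets whose vectors are most similar to the query's vector (by cosine similarity) are selected. The tiny hand-written 3-dimensional vectors and the names `cosine_similarity` and `top_k_snippets` are hypothetical; real embedding models produce much longer vectors. The parameter `k` plays roughly the role of the Max Snippets Count setting described under LocalLibrary settings.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat it as unrelated
    return dot / (norm_a * norm_b)


def top_k_snippets(query_vec, snippets, k):
    """Return the texts of the k snippets most similar to the query.

    `snippets` is a list of (text, embedding) pairs.
    """
    ranked = sorted(
        snippets,
        key=lambda s: cosine_similarity(query_vec, s[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


# Hypothetical "embeddings", for illustration only.
snippets = [
    ("Quarterly revenue grew 12%.", [0.9, 0.1, 0.0]),
    ("The cafeteria menu changes weekly.", [0.0, 0.2, 0.9]),
    ("Operating costs fell in Q3.", [0.7, 0.3, 0.1]),
]
query = [0.8, 0.2, 0.05]  # stands in for an embedding of "How did finances change?"
print(top_k_snippets(query, snippets, k=2))
# → ['Quarterly revenue grew 12%.', 'Operating costs fell in Q3.']
```

The unrelated cafeteria snippet scores far lower than the two finance snippets, which is why it is left out even though none of the snippets shares exact words with the question.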

Key Features of LocalLibrary RAG:

- Privacy: On-device document indexing and embedding. Use local AI models to chat with your documents. Optionally, you can use cloud LLMs to chat with your documents if your hardware can't run local AI models. Even when you use cloud AI models with Braina, your conversations remain private and are not used for training.

- Multiple File Formats: LocalLibrary supports multiple file formats such as PDF, Word (.docx), RTF, plain text, and Markdown. You can customize which file extensions are indexed in LocalLibrary settings.

- Customization: Braina provides customizable settings for optimal RAG performance. You can customize the snippet size, the file extensions to index, and the number of snippets sent to the LLM with your prompt.

- Free: LocalLibrary works even in the free version of Braina, so you can avoid the subscription fees associated with cloud-based AI services.

- Multiple Embedding Choices: Braina supports multiple open-source embedding models (including multilingual ones), so you can choose the one that fits your requirements.

- Automatic re-indexing: Braina will automatically re-index the collection when you add or remove files from the associated folder.

- Diverse Data Source Integration: Enhance your information retrieval by combining LocalLibrary with Braina's other features, such as Persistent Chat Memory; file, image, screenshot, and webpage attachments; audio/video file transcription; and Deep Web Search.

- TTS and Speech Recognition: Using Braina's Dictation mode, you can create queries or prompts simply by speaking and hear responses in a lifelike TTS voice, making communication feel natural and engaging. Braina supports both local and cloud-based TTS and speech recognition.
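Automatic re-indexing implies working out which files were added to or removed from a collection's folder since the last indexing run. The Python sketch below shows one common approach under a stated assumption: the indexer remembers the set of file paths it last indexed and compares that set with the folder's current contents. The function names `detect_changes` and `list_folder` are hypothetical, and Braina's actual change-detection mechanism is not described in this guide.

```python
from pathlib import Path


def detect_changes(indexed_paths, current_paths):
    """Compare the previously indexed file set with the folder's current contents.

    Returns (added, removed) as sorted lists of paths.
    """
    indexed = set(indexed_paths)
    current = set(current_paths)
    added = sorted(current - indexed)    # new files that still need to be embedded
    removed = sorted(indexed - current)  # files whose snippets should be dropped
    return added, removed


def list_folder(folder):
    """List every file under `folder` (recursively) as a POSIX-style relative path."""
    root = Path(folder)
    return [p.relative_to(root).as_posix() for p in root.rglob("*") if p.is_file()]


# Example with hypothetical file lists (no disk access needed).
previous = ["report.pdf", "notes.md"]
now = ["report.pdf", "summary.docx"]
added, removed = detect_changes(previous, now)
print(added, removed)  # ['summary.docx'] ['notes.md']
```

To scan a real folder, `list_folder(path)` could supply `current_paths`. Note that this sketch only detects added and removed files; spotting edits to an existing file would additionally require comparing something like modification times or content hashes.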

Getting Started with LocalLibrary

To access LocalLibrary, press Ctrl+Shift+D in Braina's window. This opens the LocalLibrary panel on the right side of the window. Alternatively, you can click the LocalLibrary button on the toolbar to open the panel.

Create a Document Collection

- Click the + button in the LocalLibrary panel to add a new collection. If this is your first time, wait for the embedding model download to complete, then click the + button again.

- Give your collection a meaningful name.

- Select the folder containing your documents.

- Wait for the indexing process to complete.

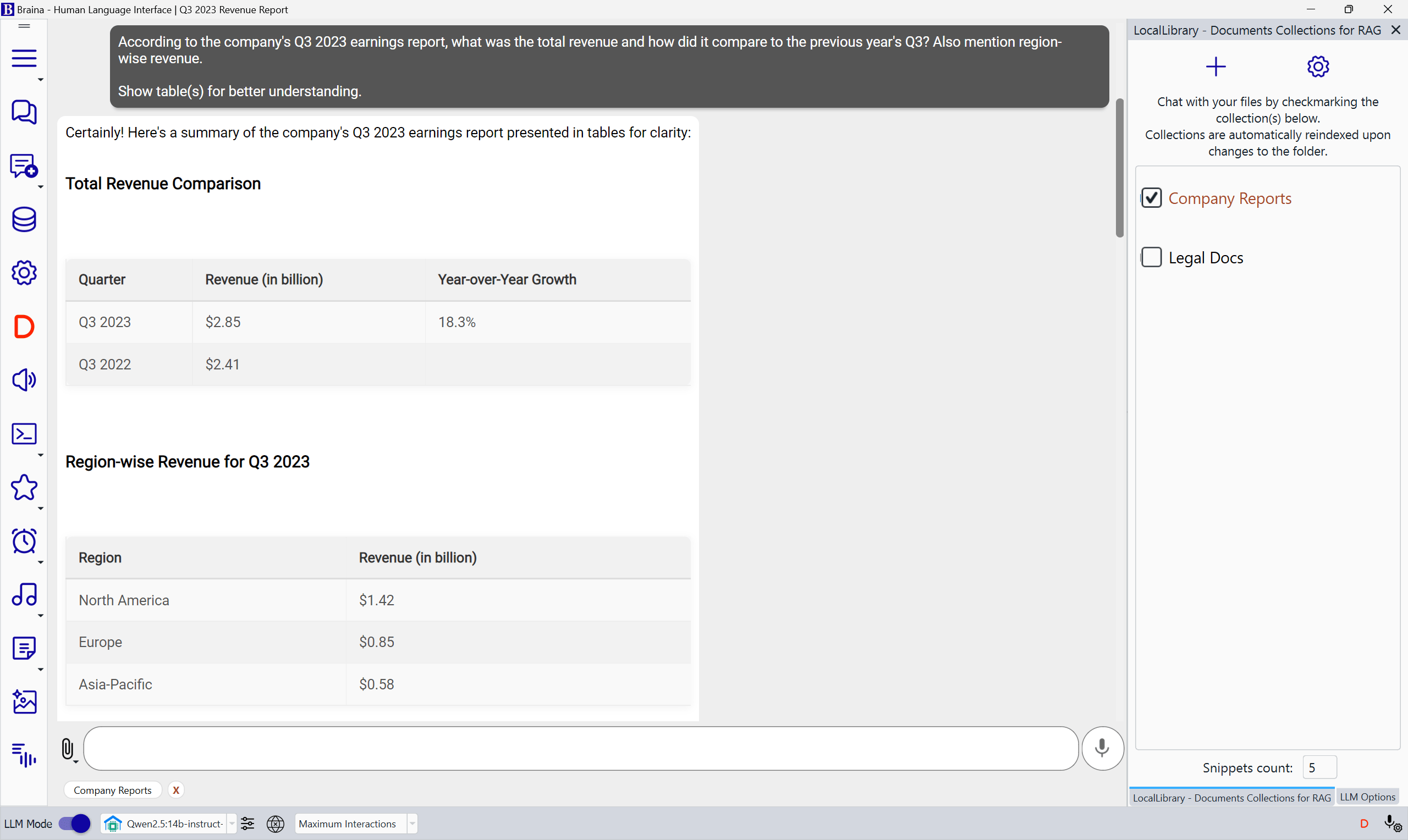

Chatting with collections of documents

- Select the checkbox next to the collection you want to chat with in the LocalLibrary panel.

- Type your question/prompt in the main chat interface.

- Braina automatically retrieves relevant information from your documents and provides contextual responses using the selected LLM.
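Conceptually, in a RAG system the retrieved snippets are placed into the request sent to the LLM alongside your question. The Python sketch below shows one generic way to assemble such an augmented prompt. The template wording, the `build_rag_prompt` name, and the "[n] (source) text" labeling format are assumptions made for illustration; they are not Braina's actual prompt.

```python
def build_rag_prompt(question, snippets):
    """Assemble a prompt that gives the LLM retrieved context before the question.

    `snippets` is a list of (source_file, text) pairs, already ranked by relevance.
    """
    context_lines = []
    for i, (source, text) in enumerate(snippets, start=1):
        # Label each snippet with its source file so the model can cite it if asked.
        context_lines.append(f"[{i}] ({source}) {text}")
    context = "\n".join(context_lines)
    return (
        "Answer the question using the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_rag_prompt(
    "What was the revenue growth?",
    [
        ("q3_report.pdf", "Quarterly revenue grew 12%."),
        ("q3_report.pdf", "Operating costs fell in Q3."),
    ],
)
print(prompt)
```

Keeping a source label next to each snippet is what makes it possible for a model to name the files it drew on when you ask it to list its sources.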

Tip: To view the source files referenced in an answer, either ask the LLM in your prompt to list its sources, or modify the model's system prompt in the LLM options panel so that you don't have to ask for the sources each time.

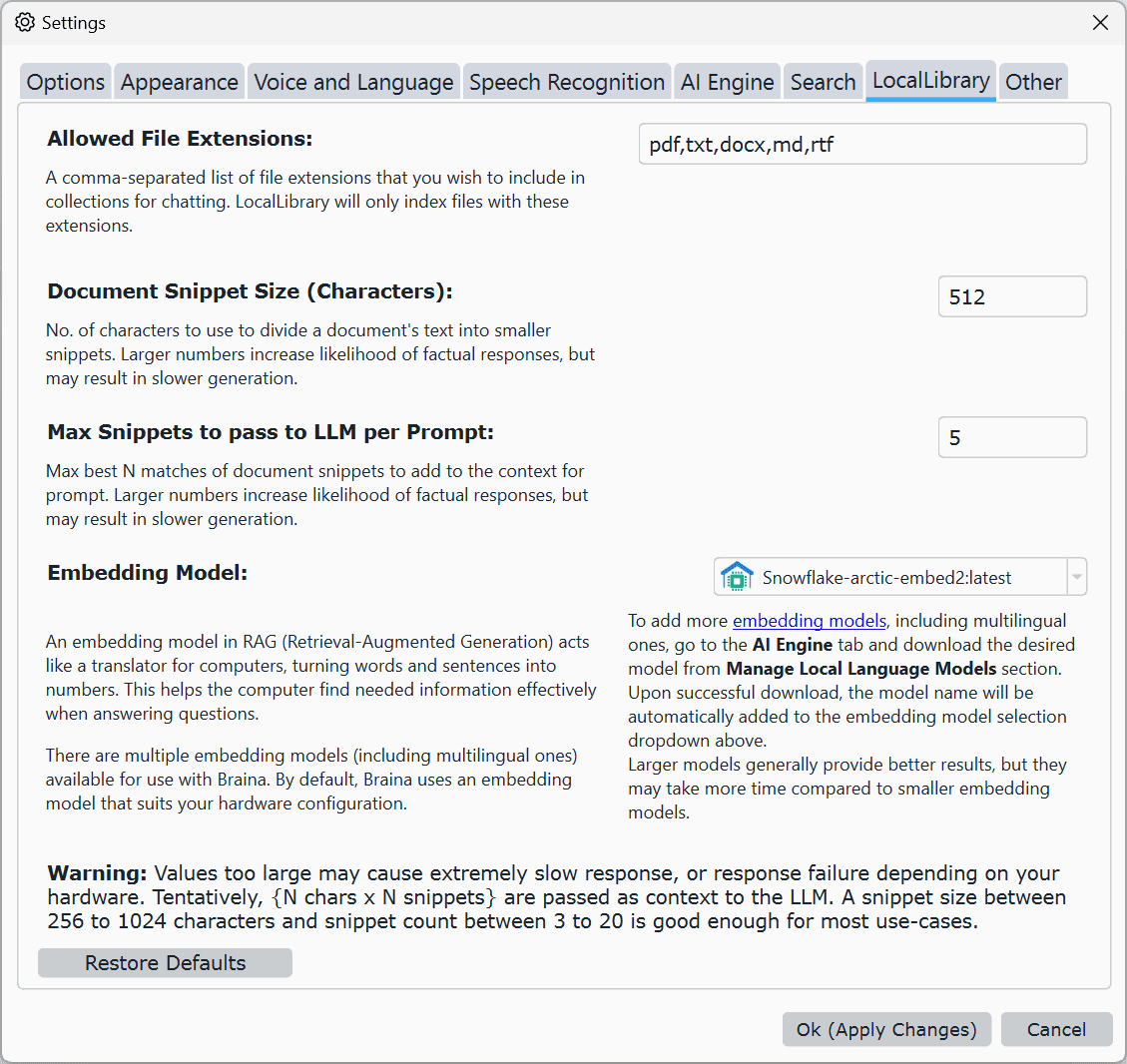

LocalLibrary settings

You can access LocalLibrary settings by clicking the Settings button in the LocalLibrary panel.

- Allowed file extensions: A comma-separated list of the file extensions to index.

- Snippet Size: The size of the snippets (chunks) into which documents are divided for indexing (recommended: 256-1024 characters).

- Max Snippets Count: The number of relevant snippets sent as context with each prompt (recommended: 3-20). Larger values provide more context but may slow down responses, especially if you are using a local AI model.

- Embedding model: Select the embedding model to use for indexing. Multiple embedding models are available for use with Braina. To use an embedding model, download it the same way you download local AI models in Braina: Settings -> AI Engine -> Manage Local Language Models. For a list of embedding model tags, see the Embedding models library.
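The Allowed file extensions and Snippet Size settings correspond to two common indexing steps: filtering files by extension and splitting document text into chunks. The Python sketch below illustrates both under simple assumptions: extensions are matched case-insensitively with or without a leading dot, and text is cut into consecutive character windows with an optional overlap. The function names and the `overlap` parameter are hypothetical; this guide does not say whether Braina overlaps snippets or respects sentence boundaries when splitting.

```python
from pathlib import Path


def parse_extensions(setting):
    """Turn a comma-separated list like 'pdf, .DOCX, txt' into {'.pdf', '.docx', '.txt'}."""
    exts = set()
    for item in setting.split(","):
        item = item.strip().lower()
        if item:
            exts.add(item if item.startswith(".") else "." + item)
    return exts


def is_allowed(filename, allowed):
    """True if the file's extension (case-insensitive) is in the allowed set."""
    return Path(filename).suffix.lower() in allowed


def split_into_snippets(text, size, overlap=0):
    """Split text into snippets of at most `size` characters.

    Consecutive snippets share `overlap` characters, which reduces the chance
    that a relevant passage is cut in half at a snippet boundary.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


allowed = parse_extensions("pdf, .DOCX, txt")
print(sorted(allowed))                                       # ['.docx', '.pdf', '.txt']
print(is_allowed("Report.PDF", allowed))                     # True
print(split_into_snippets("abcdefghij", size=4, overlap=1))  # ['abcd', 'defg', 'ghij']
```

In practice the snippet size would fall in the recommended 256-1024 character range; the tiny size here only keeps the output readable. Smaller snippets make retrieval more precise but give the LLM less surrounding context per snippet, which is why snippet size and Max Snippets Count are usually tuned together.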

Tip: Larger embedding models generally offer better accuracy but require more processing power and longer indexing time. If you have a slow computer, use a small embedding model such as all-minilm. Performance depends on your hardware, so adjust the settings accordingly for optimal results.

Collection management

Right-click a collection name in the LocalLibrary panel to manage it. The available options are:

- Open folder: Opens the associated folder containing the source documents.

- Add file(s): Add additional files to the collection.

- Remove collection: Deletes the collection from LocalLibrary. The source files themselves are not deleted.

- Recreate Collection: Rebuilds the collection index from scratch. This may be needed after you change settings such as the snippet size or the embedding model.
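Changing the snippet size or embedding model makes an existing index stale because the stored snippets and vectors were produced with the old settings: a new snippet size changes how text is divided, and vectors from different embedding models generally cannot be compared with each other. The Python sketch below shows how an indexer might detect this, assuming it saves the settings used at indexing time alongside the index. The `IndexSettings` type, the `needs_rebuild` function, and the model name `other-embedding-model` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IndexSettings:
    """Settings that determine the content of an index (hypothetical)."""
    snippet_size: int
    embedding_model: str


def needs_rebuild(stored, current):
    """An index built with a different snippet size or embedding model is stale."""
    return stored != current  # dataclasses compare field by field


stored = IndexSettings(snippet_size=512, embedding_model="all-minilm")
print(needs_rebuild(stored, IndexSettings(512, "all-minilm")))             # False
print(needs_rebuild(stored, IndexSettings(512, "other-embedding-model")))  # True
print(needs_rebuild(stored, IndexSettings(1024, "all-minilm")))            # True
```

In this sketch, Max Snippets Count is deliberately left out of `IndexSettings`, because it only affects how many snippets are retrieved per prompt, not how the index itself is built.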

Tip: Hover the mouse cursor over a collection name to see information such as the number of indexed files and words in the collection, the folder path, the embedding model, and the date and time of the last update. If a collection shows a warning sign instead of a checkbox, there may be a problem with it. To view notes about the issue, hover over the collection name or right-click it.