Run Local LLM / AI Language Models: Your Private Chatbot on Your Windows PC

One of the most exciting features of Braina is the ability to run language models (often called Large Language Models, or LLMs, and Small Language Models) locally on your personal computer. This guide walks you through the process step by step and shows how you can use Braina to harness AI language models without relying on cloud-based services (such as ChatGPT, Gemini, or Claude), keeping your data private and under your control.

Why Run AI Language Models Locally?

Before we dive into the how-to, let's understand why you might want to run AI language models on your own computer:

- Privacy: Your conversations and data remain on your device, so no external company can access your interactions.

- Offline Access: Use AI capabilities without an internet connection.

- Customization: Tailor the model to your specific needs and preferences.

- Free: Avoid subscription fees associated with cloud-based AI services.

- Uncensored: Some uncensored language models offer unrestricted conversations on various topics without excluding content deemed sensitive or controversial.

- Learning Experience: Gain hands-on experience with AI technology.

- Personal AI Chatbot: Local AI models with a personality can work as a personal assistant chatbot.

- Multiple Choices: There are hundreds of free language models available from various vendors, trained for different tasks.

Getting Started with Local AI Language Models

Braina is free, user-friendly software for running local AI language models on your own Windows computer. It simplifies downloading and running language models, and it supports both CPU and GPU (Nvidia/CUDA and AMD) for local inference. Moreover, new AI models typically become available in Braina soon after they are launched.

Advanced Features

Braina offers several advanced features that work with local language models:

- Voice Interface: Use text-to-speech and speech-to-text capabilities.

- Web Search: Integrate internet search results into your AI interactions.

- Multimodal Support: Work with both text and images.

- Use AI responses anywhere: Braina can automatically type the local model's response into whichever software or website is currently open and has focus. This means you can write articles, emails, or programming code directly in any software (e.g., MS Word, WordPress, Gmail, Visual Studio) without having to copy and paste. To learn more about this feature, refer to "How to use advanced AI chat directly in any software or website?"

- File Attachments: Analyze files directly with your offline AI assistant.

- Cloud AI support: If the local model is not smart enough for a particular complex task, you can always switch to cloud large language models like OpenAI's ChatGPT, Anthropic's Claude or Google's Gemini. Even when you use cloud AI models with Braina, your conversation remains private and it is not used for training.

- Fastest Inference: Braina's inference engine aims to deliver higher speed (tokens/second) than other local AI model inference programs.

Setting up Braina and Local Language Models

- Download and Install Braina: Download the latest Braina setup file from https://braina.ai/download, then follow the on-screen instructions to install Braina on your Windows PC.

- Hardware Consideration: Running AI models locally is becoming more accessible, but you should still consider your computer's capabilities when choosing a model. Larger models require more powerful hardware. Consider using quantized models (e.g., q4_0 or q5_K_M) for better performance on less powerful machines, and make sure you have enough memory (RAM/VRAM) and storage for the models you wish to run. If you have a less powerful CPU/GPU, try small models with fewer parameters, such as Qwen2 1.5B (qwen2:1.5b).

- CPU vs. GPU: Braina automatically detects whether you have a GPU and uses it for better performance. If no GPU is found, it runs on your CPU. Support for CPU and NVIDIA GPU is provided by default. If you want to use an AMD GPU for running AI models, you will need to download and install an additional package: AMD ROCm Support for AI Models.

- Downloading Local AI Models: Braina supports various language models (in GGUF/Ollama/Llama.cpp format), including popular ones like Meta's Llama 3.1, Qwen2, Microsoft's Phi-3, and Google's Gemma 2. Follow the steps below to download a language model.

- To find available models, visit the AI LLM Library and copy the model tag of the model that best suits your requirements.

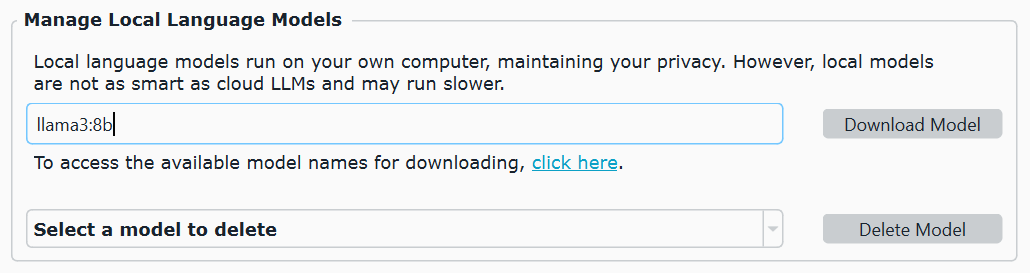

- In Braina, go to Settings (Ctrl+Alt+E) > AI engine tab. Under the "Manage Local Models" section, paste the model tag you copied in the previous step and click the "Download Model" button.

- Wait until the model has finished downloading. You can click the "Download in Background" button to hide the download progress dialog, but keep Braina running so that the download is not interrupted.

- Downloading Models from Hugging Face: To download any GGUF model directly from the Hugging Face Hub for use in Braina, follow these steps:

- Navigate to the specific model page on Hugging Face that you wish to use.

- Copy both the username and the repository name associated with the model.

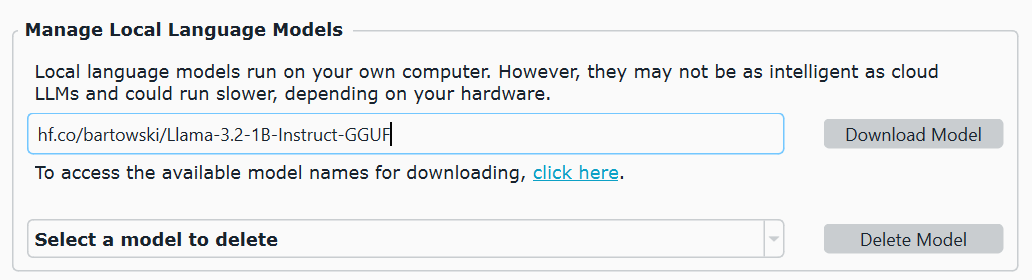

- Under the "Manage Local Models" section in Braina, enter the tag in this format: hf.co/{username}/{repository}. For example: hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF. To download a specific quantized version of the model, append the desired quantization level to the repository name in this format: hf.co/{username}/{repository}:{quantization}. For example: hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF:Q5_K_M

- Click the "Download Model" button.
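The Hugging Face tag format described in the steps above can be sketched as a small helper. This is only an illustration of the string format given in this guide; the function name `hf_model_tag` is hypothetical and is not part of Braina, which simply expects the finished tag to be pasted into the "Manage Local Models" field.

```python
from typing import Optional


def hf_model_tag(username: str, repository: str,
                 quantization: Optional[str] = None) -> str:
    """Build a tag in the form hf.co/{username}/{repository}[:{quantization}].

    Hypothetical helper for illustration only; it just formats a string.
    """
    for part in (username, repository):
        # Each part must be a single, non-empty path segment.
        if not part or "/" in part or ":" in part:
            raise ValueError(f"invalid tag component: {part!r}")
    tag = f"hf.co/{username}/{repository}"
    if quantization:
        # The quantization level is appended after a colon.
        tag += f":{quantization}"
    return tag


print(hf_model_tag("bartowski", "Llama-3.2-1B-Instruct-GGUF"))
# hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF
print(hf_model_tag("bartowski", "Llama-3.2-1B-Instruct-GGUF", "Q5_K_M"))
# hf.co/bartowski/Llama-3.2-1B-Instruct-GGUF:Q5_K_M
```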

- Run Language Model: Once the download is complete, select the model from the Advanced AI Chat/LLM selection dropdown menu on the status bar, enable the "LLM Mode" switch, and begin chatting with the AI model. All local AI language models can be identified by their icon. If you can't see the model name in the dropdown list, turn the LLM Mode switch off and then on again to refresh.

- LLM Options: You can access advanced options for the currently selected LLM, such as context length, system prompt, and temperature, by pressing the Ctrl+O keyboard shortcut or by clicking the LLM options icon on the right side of the Advanced AI Chat/LLM selection dropdown menu on the status bar.
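To make the Hardware Consideration step more concrete, here is a rough sketch of how quantization affects the memory a model's weights need. The bits-per-weight figures are approximate assumptions for illustration (actual GGUF file sizes vary by model and quantization mix), and the estimate ignores the extra memory used by the context window and runtime overhead. The function name `estimate_model_gib` is hypothetical.

```python
# Approximate bits per weight for a few formats.
# These values are assumptions for illustration; real files differ somewhat.
BITS_PER_WEIGHT = {
    "f16": 16.0,
    "q8_0": 8.5,
    "q5_K_M": 5.7,
    "q4_0": 4.5,
}


def estimate_model_gib(params_billions: float, quant: str) -> float:
    """Rough size of the model weights in GiB.

    Ignores context (KV cache) memory and runtime overhead, so the
    real RAM/VRAM requirement will be higher than this number.
    """
    bits = BITS_PER_WEIGHT[quant]
    total_bytes = params_billions * 1e9 * bits / 8
    return total_bytes / 2**30


# Compare a small 1.5B-parameter model across quantization levels.
for quant in ("f16", "q5_K_M", "q4_0"):
    size = estimate_model_gib(1.5, quant)
    print(f"1.5B model at {quant}: ~{size:.2f} GiB for weights")
```

Comparing the printed estimate with your free RAM or VRAM gives a first indication of whether a model and quantization level are a reasonable fit before you download it.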